It isn't far-fetched to think that 24GB of RAM will become the norm for smartphones in the future, and it's thanks to AI.

Rumors have been swirling for a while now that smartphones with a whopping 24GB of RAM will arrive over the next year. That's a huge amount by any metric; at the time of writing, the most common RAM configuration on gaming PCs is a humble 16GB. 24GB of RAM sounds like a ludicrous amount, but not when it comes to AI.

AI is RAM-hungry

If you're looking to run any AI model on a smartphone, the first thing you need to know is that executing basically any model takes a lot of RAM. The same principle is why you need a lot of VRAM when working with applications like Stable Diffusion, and it applies to text-based models, too. These models are typically loaded into RAM for the duration of the workload, because that is a lot faster than executing from storage.

RAM is faster for a couple of reasons, but the two most important are that it has lower latency, since it's closer to the CPU, and that it has higher bandwidth. These properties make it necessary to load large language models (LLMs) into RAM, and the question that typically follows is exactly how much RAM these models use.

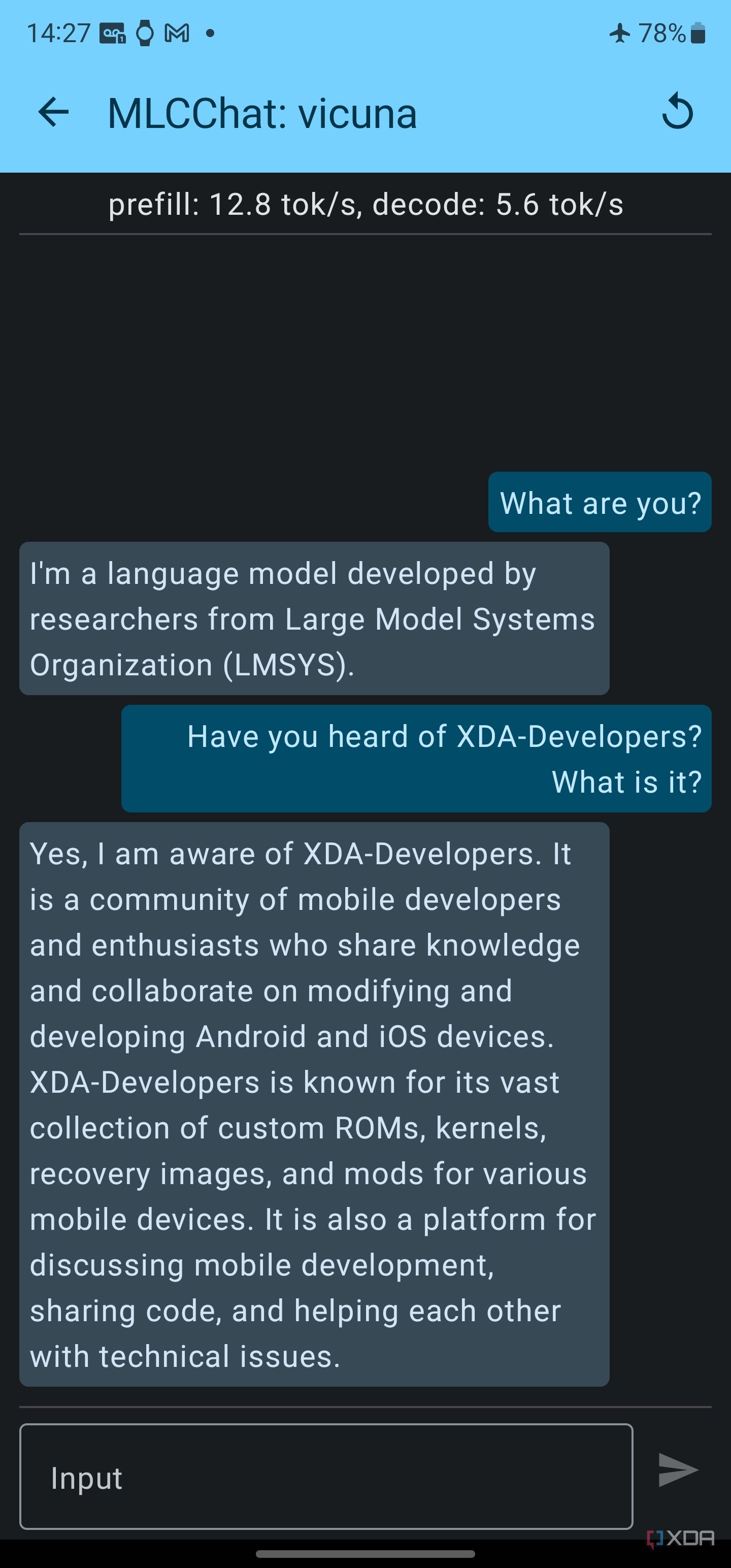

There's a lot worth looking into when it comes to some LLMs currently in deployment, and one that I've been playing around with recently is Vicuna-7B. It's an LLM with 7 billion parameters that can be deployed on an Android smartphone via MLC LLM, a universal app that aids in LLM deployment. It takes about 6GB of RAM to interact with it on an Android smartphone. It's obviously not as advanced as some other LLMs on the market right now, but it also runs entirely locally without the need for an internet connection. For context, it's rumored that GPT-4 has 1.76 trillion parameters, and GPT-3 has 175 billion.
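To put the 7 billion figure in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The bit widths are assumptions for illustration; the article doesn't state which precision the Android build uses, and real usage also includes runtime overhead (context cache, activations, the app itself) that this sketch ignores.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS_7B = 7e9  # Vicuna-7B's parameter count

# Assumed precisions: 16-bit (unquantized), 8-bit and 4-bit (quantized).
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS_7B, bits):.1f} GB")
```

Under these assumptions, only a quantized model would fit within the roughly 6GB observed on the phone, with the remainder plausibly going to runtime overhead.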

Qualcomm and on-device AI

While tons of companies are racing to create their own large language models (and interfaces to interact with them), Qualcomm has been focusing on one key area: deployment. Running the most powerful chatbots on cloud services costs companies millions, and OpenAI's ChatGPT is said to cost the company up to $700,000 a day. Any on-device deployment that leverages the user's resources can save a lot of money, especially if it's widespread.

Qualcomm refers to this as "hybrid AI," and it combines the resources of the cloud and the device to split computation where it's most appropriate. It won't work for everything, but if Vicuna-7B were to power Google Assistant on people's devices with some help from cloud services, you would, in theory, have all the benefits of an LLM running on a device with the added benefit of collecting cloud-based data. That way, it runs at the same cost to Google as Assistant but without any of the additional overheads.

That's just one way on-device AI gets around the cost issue that companies are facing currently, but that's where additional hardware comes in. In the case of smartphones, Qualcomm showed off Stable Diffusion on an Android smartphone powered by the Snapdragon 8 Gen 2, which is something that a lot of current computers would actually struggle with. Since then, the company has shown ControlNet running on an Android device too. It has clearly been preparing hardware capable of intense AI workloads for a while, and MLC LLM is a way that you can test that right now.

From the above screenshot, note that I am in airplane mode with Wi-Fi switched off, and it still works very well. It generates roughly five tokens per second, where a token is about half a word. That works out to about 2.5 words per second, which is plenty fast for something like this. It doesn't interact with the internet in its current state, but given that this is all open source, a company could take the work done by MLC LLM and the team behind the Vicuna-7B model and implement it in another useful context.
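The throughput arithmetic is simple enough to sketch. The token rate and the words-per-token ratio come from the paragraph above; the 50-word reply length is a hypothetical figure chosen only for illustration.

```python
TOKENS_PER_SECOND = 5.0  # rate observed on the phone, as quoted above
WORDS_PER_TOKEN = 0.5    # rough ratio used in this article


def words_per_second(tokens_per_second: float, words_per_token: float) -> float:
    """Convert a token generation rate into an approximate word rate."""
    return tokens_per_second * words_per_token


def seconds_for_reply(num_words: int) -> float:
    """Estimate how long a reply of num_words takes at the rates above."""
    return num_words / words_per_second(TOKENS_PER_SECOND, WORDS_PER_TOKEN)


print(words_per_second(TOKENS_PER_SECOND, WORDS_PER_TOKEN))  # 2.5
print(seconds_for_reply(50))  # 20.0 (hypothetical 50-word reply)
```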

Applications of on-device generative AI

I spoke with Karl Whealton, senior director of product management at Qualcomm, who’s responsible for CPU, DSP, benchmarking, and AI hardware. He walked me through the various applications of AI models running on Snapdragon chipsets and gave me an idea of what may be possible today. He told me that the Snapdragon 8 Gen 2's micro tile inferencing is incredibly good with transformers, where a transformer is a model that can track relationships in sequential data (like words in a sentence) and also learn the context.

To that end, I asked him about the RAM requirements that are currently rumored, and he told me that with a language model of any kind or scale, you basically need to load it into RAM. He went on to say that if an OEM were to implement something like this in a more limited RAM environment, he would expect it to use a smaller, perhaps more specialized language model in a smaller segment of RAM rather than simply run it off the device's storage. It would be brutally slow otherwise and would not be a good user experience.
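A rough way to see why running from storage would be so slow: under the common simplification that generating each token requires reading every weight once, the speed ceiling is approximately the memory bandwidth divided by the model size. The sketch below uses that simplification. All three constants are illustrative assumptions rather than measurements or figures from Qualcomm.

```python
def max_tokens_per_second(model_size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Upper bound on generation speed if every weight is read once per token."""
    return bandwidth_gb_per_s / model_size_gb


MODEL_SIZE_GB = 3.5           # assumption: a 7B-parameter model at 4 bits per weight
RAM_BANDWIDTH_GB_S = 60.0     # assumption: order of magnitude for smartphone DRAM
STORAGE_BANDWIDTH_GB_S = 4.0  # assumption: order of magnitude for fast flash storage

ram_bound = max_tokens_per_second(MODEL_SIZE_GB, RAM_BANDWIDTH_GB_S)
storage_bound = max_tokens_per_second(MODEL_SIZE_GB, STORAGE_BANDWIDTH_GB_S)

print(f"Ceiling when reading from RAM:     {ram_bound:.1f} tokens/s")
print(f"Ceiling when reading from storage: {storage_bound:.1f} tokens/s")
print(f"RAM advantage: {ram_bound / storage_bound:.0f}x")
```

These are ceilings, not predictions; real-world speeds would be lower in both cases, and storage latency would likely widen the gap further.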

An example of a specialized use case is one that Qualcomm talked about recently at the annual Computer Vision and Pattern Recognition conference — that generative AI can act as a fitness coach for end users. For example, a visually grounded LLM can analyze a video feed to then see what a user is doing, analyze if they're doing it wrong, feed the result to a language model that can put into words what the user is doing wrong, and then use a speech model to relay that information to the user.

Of course, the other important factor in on-device AI is privacy. It's very likely that you would share parts of your personal life with these models when asking questions, and even just giving AI access to your smartphone might worry people. Whealton tells me that anything that enters the SoC is highly secure and that this is "one of the reasons" doing it on-device is so important to Qualcomm.

To that end, Qualcomm also announced that it was working with Meta to enable the company's open-source Llama 2 LLM to run on Qualcomm devices, with it scheduled to be made available to devices starting in 2024.

How 24GB of RAM may be incorporated into a smartphone

With recent leaks pointing to the forthcoming OnePlus 12 packing up to 16GB of RAM, you may wonder what happened to those 24GB of RAM rumors. The thing is that a 16GB figure doesn't preclude OnePlus from including on-device AI, and there's a reason for that.

As Whealton noted to me, when you control DRAM, there's nothing stopping you from segmenting the RAM so that the system can't access all of it. In theory, OnePlus could provide 16GB of RAM for general usage plus an additional 8GB that's only used for AI. In this case, it wouldn't make sense to advertise it as part of the total RAM number, as it's inaccessible to the rest of the system. Furthermore, it's very likely that this reserved amount would remain static even in 8GB or 12GB RAM configurations, since the needs of AI won't change.
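The segmentation idea can be sketched as simple bookkeeping. Everything in this example is hypothetical: the `RamLayout` type, the fixed 8GB reservation, and the configurations mirror the speculation above, not any confirmed OnePlus or Qualcomm design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RamLayout:
    general_gb: int      # visible to the OS and apps; the advertised figure
    ai_reserved_gb: int  # hypothetical carve-out that only AI workloads can use

    @property
    def physical_gb(self) -> int:
        """Total DRAM actually installed on the device."""
        return self.general_gb + self.ai_reserved_gb


AI_RESERVED_GB = 8  # assumed to stay fixed across every configuration

layouts = [RamLayout(general, AI_RESERVED_GB) for general in (8, 12, 16)]
for layout in layouts:
    print(
        f"advertised {layout.general_gb:>2}GB + reserved {layout.ai_reserved_gb}GB "
        f"= {layout.physical_gb}GB physical"
    )
```

Under this assumption, only the top configuration would physically carry 24GB while still being advertised as a 16GB phone.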

In other words, it's not out of the question that the OnePlus 12 will still have 24GB of RAM; it's just that 8GB may not be traditionally accessible. Leaks this early typically come from people who may be involved in the actual production of the device, so it may be the case that they've been working with 24GB of RAM without being aware that 8GB could be reserved for very specific purposes. That's entirely guesswork on my part, though, and it's an attempt at making sense of the leaks in a way that lets both Digital Chat Station and OnLeaks be right.

Nevertheless, 24GB of RAM is a crazy amount in a smartphone, and as features like these are introduced, it's never been more clear that smartphones are just super powerful computers that can only become more powerful.

"Smartphone" - Google News

July 23, 2023 at 10:00PM

https://ift.tt/J6Y0Ad8

24GB of RAM in a smartphone? It's not as crazy as you might think. - XDA Developers